This post is part of my Game Math Series.

Also, this post is part 1 of a series (part 2) leading up to a geometric interpretation of Fourier transform and spherical harmonics.

Fourier transform and spherical harmonics are mathematical tools that can be used to represent a function as a combination of periodic functions (functions that repeat themselves, like sine waves) of different frequencies. You can approximate a complex function by using a limited number of periodic functions at certain frequencies. Fourier transform is often used in audio processing to post-process signals as combination of sine waves of different frequencies, instead of single streams of sound waves. Spherical harmonics can be used to approximate baked ambient lights in game levels.

We’ll revisit these tools in later posts, so it’s okay if you’re still not clear how they can be of use at this point. First, let’s start somewhere more basic.

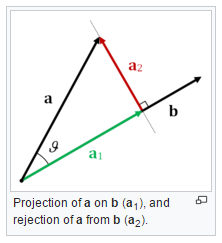

Projecting a Vector onto Another

If you have two vectors ![]() and

and ![]() , projecting

, projecting ![]() onto

onto ![]() means stripping out part of

means stripping out part of ![]() that is orthogonal to

that is orthogonal to ![]() , so the result is the part of

, so the result is the part of ![]() that is parallel to

that is parallel to ![]() . Here is a figure I borrowed from Wikipedia:

. Here is a figure I borrowed from Wikipedia:

The dot product of ![]() and

and ![]() is a scalar equal to the product of magnitude of both vectors and the cosine of the angle (

is a scalar equal to the product of magnitude of both vectors and the cosine of the angle (![]() , using the figure above) between the vectors:

, using the figure above) between the vectors:

![]()

Another way of calculating the dot product is adding together the component-wise products. If ![]() and

and ![]() , then:

, then:

![]()

A follow-up to the alternate formula above is the formula for vector magnitude. The magnitude of a vector is the square root of the dot product of the vector with itself:

![]()

The geometric meaning of the dot product ![]() is the magnitude of the projection of

is the magnitude of the projection of ![]() onto

onto ![]() scaled by

scaled by ![]() . Dot product is commutative, i.e.

. Dot product is commutative, i.e. ![]() , which means that

, which means that ![]() is also equal to the magnitude of the projection of

is also equal to the magnitude of the projection of ![]() onto

onto ![]() scaled by

scaled by ![]() .

.

So if you want to get the magnitude of the projection of ![]() onto

onto ![]() , you need to divide the dot product by the magnitude of

, you need to divide the dot product by the magnitude of ![]() :

:

![]()

To get the actual projected vector of ![]() onto

onto ![]() , multiply the magnitude with the unit vector in the direction of

, multiply the magnitude with the unit vector in the direction of ![]() , denoted by

, denoted by ![]() :

:

![Rendered by QuickLaTeX.com \[ proj(\vec{a}, \vec{b}) = (\dfrac{\vec{a} \cdot \vec{b}}{|\vec{b}|}) \hat{b} = (\dfrac{\vec{a} \cdot \vec{b}}{{|\vec{b}|}^2}) \vec{b} = (\dfrac{\vec{a} \cdot \vec{b}}{\vec{b} \cdot \vec{b}}) \vec{b} \]](https://allenchou.net/wp-content/ql-cache/quicklatex.com-2cdfe25f6a724f5263407643823dc8f3_l3.png)

One important property of dot product ![]() is: if it’s positive, the two vectors point in roughly the same direction (

is: if it’s positive, the two vectors point in roughly the same direction (![]() ); if it’s zero, the vectors are orthogonal (

); if it’s zero, the vectors are orthogonal (![]() ); if it’s negative, the vectors point away from each other (

); if it’s negative, the vectors point away from each other (![]() ).

).

For the dot product of two unit vectors, like ![]() , it’s just

, it’s just ![]() . If it’s 1, then the two vectors point in exactly the same direction (

. If it’s 1, then the two vectors point in exactly the same direction (![]() ); if it’s -1, then the two vectors point in exactly opposite directions (

); if it’s -1, then the two vectors point in exactly opposite directions (![]() ). So, in order to measure how close the directions two vectors point in, we can normalize both vectors and take their dot product.

). So, in order to measure how close the directions two vectors point in, we can normalize both vectors and take their dot product.

Let’s say we have three vectors: ![]() ,

, ![]() , and

, and ![]() . If we want to determine which of

. If we want to determine which of ![]() and

and ![]() points in a direction closer to where

points in a direction closer to where ![]() points, we can just compare the dot products of their normalized versions,

points, we can just compare the dot products of their normalized versions, ![]() and

and ![]() . Whichever vector’s normalized version has a larger dot product with

. Whichever vector’s normalized version has a larger dot product with ![]() points in a direction closer to that of

points in a direction closer to that of ![]() .

.

A metric often used to measure the difference between two data objects is the root mean square error (RMSE) which is the square root of the average of component-wise errors. For vectors, that means:

![]()

It kind of makes sense, because it is exactly the magnitude of the vector that is the difference between ![]() and

and ![]() scaled by

scaled by ![]() :

:

![]()

It’s also the square root of the dot product of the difference vector ![]() with it self scaled by

with it self scaled by ![]() :

:

![]()

Here’s an important property of projection:

The projection of a vector ![]() onto another vector

onto another vector ![]() is the vector parallel to

is the vector parallel to ![]() that has the minimal RMSE with respect to

that has the minimal RMSE with respect to ![]() . In other words,

. In other words, ![]() gives you the best scaled version of

gives you the best scaled version of ![]() to approximate

to approximate ![]() .

.

Also note that if ![]() is larger than

is larger than ![]() , it means

, it means ![]() has a smaller RMSE than

has a smaller RMSE than ![]() with respect to

with respect to ![]() ; thus,

; thus, ![]() points in a direction closer to that o

points in a direction closer to that o ![]() than

than ![]() does.

does.

Now we’re finished with vectors. It’s time to move onto curves.

“Projecting” a Curve onto Another

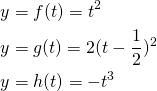

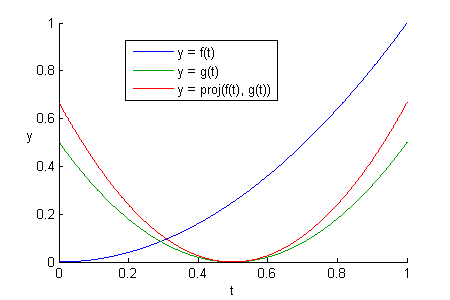

Let’s consider these three curves:

When working with curves, as opposed to vectors, we need to additionally specify an interval of of interest. For simplicity, we will consider ![]() for the rest of this post.

for the rest of this post.

Below is a figure showing what they look like side-by-side within our interval of interest:

Just like vectors, “projecting” a curve ![]() onto another curve

onto another curve ![]() gives you the best scaled version of

gives you the best scaled version of ![]() to approximate

to approximate ![]() , and the “projection” has minimal RMSE with respect to

, and the “projection” has minimal RMSE with respect to ![]() . To compute the RMSE of curves, we need to first figure out how to compute the “dot product” of two curves.

. To compute the RMSE of curves, we need to first figure out how to compute the “dot product” of two curves.

Recall that the dot product of vectors is equal to the sum of component-wise products:

![]()

Mirroring that, let’s sum up the products of samples of curves at regular intervals, and we normalize the sum by dividing it with the number of samples, so we don’t get drastically different results due to different number of samples. If we take 10 samples between ![]() to compute the dot product of

to compute the dot product of ![]() and

and ![]() , we get:

, we get:

![Rendered by QuickLaTeX.com \[ f(t) \cdot g(t) = \frac{1}{10} \sum_{i = 1}^{10} f(\frac{i}{10}) g(\frac{i}{10}) \]](https://allenchou.net/wp-content/ql-cache/quicklatex.com-100eaf5f9c6d8e9ce8689bd418bc6074_l3.png)

The more samples we use, the more accuracy we get. What if we take an infinite number of samples so we get the most accurate result possible?

![]()

This basically turns into an integral:

![]()

So there we have it, one common definition of the “dot product” of two curves:

The integral of the product of two curves over the interval of interest.

Copying the formula from vectors, the RMSE between two curves ![]() and

and ![]() is:

is:

![]()

In integral form, it becomes:

![Rendered by QuickLaTeX.com \[ RMSE(f(t), g(t)) = \sqrt{\int_{0}^{1} (f(t) - g(t))^2 dt} \]](https://allenchou.net/wp-content/ql-cache/quicklatex.com-b34918303a077278b25ca535ef38086b_l3.png)

The “mean” part of the error is omitted since it’s a division by 1, the length of our interval of interest.

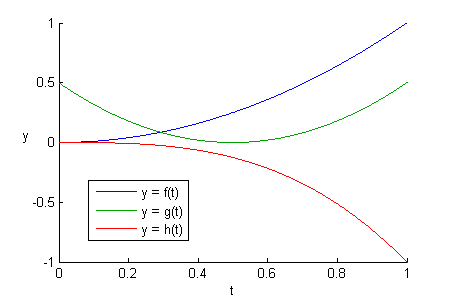

To find out which one of the “normalized” version of ![]() and

and ![]() has less RMSE with respect to the normalized version of

has less RMSE with respect to the normalized version of ![]() , we take the dot products of the normalized versions of the curves:

, we take the dot products of the normalized versions of the curves:

The dot product of ![]() and

and ![]() is larger than that of

is larger than that of ![]() and

and ![]() . That means

. That means ![]() has a lower RMSE than

has a lower RMSE than ![]() with respect to

with respect to ![]() .

.

Drawing analogy from vectors, ![]() is conceptually “pointing in a direction” closer to that of

is conceptually “pointing in a direction” closer to that of ![]() than

than ![]() does.

does.

Now let’s try finding the best scaled version of ![]() that has minimal RMSE with respect to

that has minimal RMSE with respect to ![]() , by computing the projection of

, by computing the projection of ![]() onto

onto ![]() :

:

![]()

And this is what ![]() ,

, ![]() , and

, and ![]() look like side-by-side:

look like side-by-side:

The projected curve ![]() is a scaled-up version of

is a scaled-up version of ![]() that is a better approximation of

that is a better approximation of ![]() than

than ![]() itself; it is the best scaled version of

itself; it is the best scaled version of ![]() that has the least RMSE with respect to

that has the least RMSE with respect to ![]() .

.

Now that you know how to “project” a curve onto another, we will see how to approximate a curve with multiple simpler curves while maintaining minimal error.

Great article! Applying linear algebra techniques for function was very clever idea. Now I can see how it is really related to the Fourier transform. Can’t wait to see next part 🙂